GAMMA Group Wins Best Paper Award at ACM Conference

Researchers affiliated with the Geometric Algorithms for Modeling, Motion, and Animation (GAMMA) group at the University of Maryland (UMD) have received a Best Paper Award for their work on a data-driven model and algorithm that can perceive the emotions of individuals based on their walking motion, or gait.

The paper, “Learning Gait Emotions Using Affective and Deep Features,” was presented at the 15th Annual Association for Computing Machinery (ACM) SIGGRAPH Conference on Motion, Interaction and Games (MIG 2022), held November 3–5 in Guanajuato, Mexico.

It describes an innovative method for classifying the emotions of individuals based on 3D video captures of their walking and other physical traits. Ultimately, the researchers say, this information could lead to new insights for interdisciplinary research combining artificial intelligence and public health—including novel research on aging, rehabilitation, and depression that explores the relationship between mental health, gait-based emotions and age.

The paper’s authors—Tanmay Randhavane, Uttaran Bhattacharya, Pooja Kabra, Kyra Kapsaskis, Kurt Gray, Dinesh Manocha and Aniket Bera—all have current or recent ties with GAMMA.

The GAMMA group was founded by Manocha and Ming Lin at the University of North Carolina (UNC) in the mid-1990s. After Manocha and Lin joined the UMD faculty in 2018, the group moved to Maryland.

Randhavane was Manocha’s Ph.D. advisee at UNC, while Kapsaskis and Gray were close GAMMA collaborators affiliated with UNC’s Department of Psychology and Neuroscience. Kabra is a current Maryland graduate student; Bhattacharya recently completed his Ph.D. in computer science and has joined Adobe Research; and Bera, a former UMD research faculty member, is now an associate professor at Purdue University.

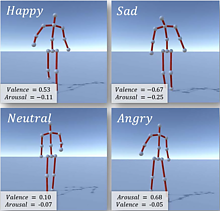

In their award-winning paper, the researchers used four emotion labels (happy, sad, angry and neutral) and examined how each related to study participants’ walking patterns over a period of time.

They then related specific posture features to each participant’s gait, drawing on benchmarks for characterizing emotion in visual perception that are common in the psychology literature. These include upper-body features such as the area of the triangle formed by the hands and the neck, the distances between the hand, shoulder and hip joints, and the angles at the neck and back.
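
To make these upper-body measurements concrete, here is a minimal Python sketch that computes them from 3D joint positions for a single frame and averages them over a walking sequence. It is an illustration under stated assumptions, not the authors' implementation: the joint names (`head`, `neck`, `spine`, `pelvis`, and so on), the choice of which joints define the neck and back angles, and the per-sequence averaging are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))

def triangle_area(p: Vec3, q: Vec3, r: Vec3) -> float:
    """Area of triangle p-q-r: half the length of the cross product of two edges."""
    return 0.5 * _norm(_cross(_sub(q, p), _sub(r, p)))

def distance(p: Vec3, q: Vec3) -> float:
    """Euclidean distance between two joints."""
    return _norm(_sub(p, q))

def angle_at(vertex: Vec3, a: Vec3, b: Vec3) -> float:
    """Angle in degrees at `vertex` between segments vertex->a and vertex->b.
    Assumes the three joints are distinct (nonzero segment lengths)."""
    u, v = _sub(a, vertex), _sub(b, vertex)
    cos_theta = _dot(u, v) / (_norm(u) * _norm(v))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))

def upper_body_features(pose: Dict[str, Vec3]) -> Dict[str, float]:
    """Posture features for one frame (joint names are hypothetical)."""
    return {
        "hands_neck_area": triangle_area(pose["left_hand"], pose["right_hand"], pose["neck"]),
        "left_hand_shoulder": distance(pose["left_hand"], pose["left_shoulder"]),
        "right_hand_shoulder": distance(pose["right_hand"], pose["right_shoulder"]),
        "left_hand_hip": distance(pose["left_hand"], pose["left_hip"]),
        "right_hand_hip": distance(pose["right_hand"], pose["right_hip"]),
        # Assumed definitions: neck angle between head and spine,
        # back angle between neck and pelvis.
        "neck_angle": angle_at(pose["neck"], pose["head"], pose["spine"]),
        "back_angle": angle_at(pose["spine"], pose["neck"], pose["pelvis"]),
    }

def gait_features(frames: List[Dict[str, Vec3]]) -> Dict[str, float]:
    """Average each per-frame feature over a walking sequence."""
    per_frame = [upper_body_features(f) for f in frames]
    return {k: sum(f[k] for f in per_frame) / len(per_frame) for k in per_frame[0]}

if __name__ == "__main__":
    upright = {
        "head": (0.0, 2.0, 0.0), "neck": (0.0, 1.5, 0.0),
        "spine": (0.0, 1.0, 0.0), "pelvis": (0.0, 0.0, 0.0),
        "left_hand": (-0.5, 0.8, 0.0), "right_hand": (0.5, 0.8, 0.0),
        "left_shoulder": (-0.2, 1.4, 0.0), "right_shoulder": (0.2, 1.4, 0.0),
        "left_hip": (-0.15, 0.0, 0.0), "right_hip": (0.15, 0.0, 0.0),
    }
    print(gait_features([upright, upright]))
```

With these assumed definitions, a perfectly upright pose gives neck and back angles of 180 degrees, and any forward slouch of the head or torso lowers them, which is one simple way such features could capture the slouched posture that psychology research associates with sadness.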

The researchers found that certain body joints are more likely to change depending on the walker’s emotion. For example, gaits labeled happy or angry showed stronger activations at the foot and hand joints, whereas sad gaits showed activations at the back and head joints, consistent with psychological findings that associate a slouched posture with sadness.

Building on this data, the researchers applied deep learning algorithms that improved the accuracy of identifying affective features (aspects of human movement that correlate with emotional states) by almost 20%.

Insights derived from this method have also been applied to social robotics, autonomous driving, and multiple virtual and augmented reality applications, including virtual character and avatar generation tools, and have appeared in multiple papers at leading venues. GAMMA is active in all of these areas, and its website highlights multiple projects on emotion recognition and affective agents in immersive technologies.

The team’s work has also been featured in local and national media, including stories on AI-driven emotional assistants, and a feature article on AI, gait and emotion in Forbes.

—This article uses portions of a news release published by the Department of Electrical and Computer Engineering