UMD Team Unveils Innovative Camera System

University of Maryland researchers recently unveiled a new camera system that mimics the tiny involuntary movements of the human eye, offering sharper resolution and more detail for computer vision applications such as object recognition software, autonomous vehicles and robotics.

Their technology, known as an event-based perception system, can achieve robust performance in both low- and high-level vision tasks while maintaining microsecond-level reaction times and stable texture.

An event camera, also known as a neuromorphic camera, is an imaging sensor that responds to local changes in brightness. Unlike conventional (frame) cameras, event cameras do not capture images using a shutter. Instead, each pixel operates independently and asynchronously, reporting changes in brightness as they occur and staying silent otherwise.
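To make the pixel behavior described above concrete, here is a minimal Python sketch of an idealized event-camera pixel array. It assumes the widely used contrast-threshold model, in which a pixel fires when its log brightness has changed by a fixed threshold since its last event. The function name `generate_events`, the `threshold` value and the frame-based input are illustrative assumptions, not details of the UMD sensor or of AMI-EV.

```python
import math


def generate_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Idealized event-camera model (an assumption, not AMI-EV's sensor model).

    frames: list of 2D lists of non-negative intensities, all the same shape.
    timestamps: one time value per frame.
    Each pixel independently remembers the log intensity at which it last
    fired and emits an event (t, x, y, polarity) whenever the current log
    intensity differs from that reference by at least `threshold`.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference log intensity, initialized from the first frame.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                diff = math.log(frame[y][x] + eps) - ref[y][x]
                # A large change can cross the threshold several times,
                # producing one event per crossing; unchanged pixels stay silent.
                while abs(diff) >= threshold:
                    polarity = 1 if diff > 0 else -1
                    events.append((t, x, y, polarity))
                    ref[y][x] += polarity * threshold
                    diff -= polarity * threshold
    return events


# One row of two pixels; only the second pixel doubles in brightness.
print(generate_events([[[1.0, 1.0]], [[1.0, 2.0]]], [0.0, 1.0]))
```

Here the first pixel reports nothing, while the second emits three positive events, one for each 0.2 step in log brightness. A real sensor does this asynchronously in continuous time; the frames in the sketch only sample the brightness the pixels would see.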

But event cameras can miss edges that happen to run in the same direction as the camera motion, the UMD researchers say. An edge that slides along its own length causes little or no change in brightness at the pixels it covers, so those pixels stay silent. Biological systems, including humans, address this problem with minuscule, jiggling eye movements known as microsaccades.

The UMD team, working with researchers in China, has replicated this process in a new way. Instead of jiggling the camera, they jiggle the bundle of light rays entering the lens: a rotating wedge prism placed in front of the aperture of an event camera continuously redirects the incoming light, so that edges keep producing brightness changes that the camera can detect.
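The geometry behind the missed edges and the prism fix can be sketched in a few lines of Python. Only the component of image motion perpendicular to an edge changes pixel brightness, so a camera moving along an edge produces no events there. The sketch models the rotating wedge prism as adding a fixed-size image offset whose direction turns at angular rate `omega`. This idealized model and all of the names (`normal_speed`, `prism_image_velocity`, `peak_normal_speed`) are hypothetical illustrations, not the AMI-EV implementation.

```python
import math


def normal_speed(edge_angle, velocity):
    """Image-edge speed along the edge's normal; only this component changes
    pixel brightness. edge_angle is the direction of the edge line (radians)."""
    nx, ny = -math.sin(edge_angle), math.cos(edge_angle)  # unit normal to the edge
    return nx * velocity[0] + ny * velocity[1]


def prism_image_velocity(t, radius, omega):
    """Image velocity added by the prism, assuming (hypothetically) that it
    shifts the image by offset(t) = radius * (cos(omega t), sin(omega t))."""
    return (-radius * omega * math.sin(omega * t),
            radius * omega * math.cos(omega * t))


def peak_normal_speed(edge_angle, camera_velocity, radius=0.0, omega=0.0,
                      samples=360):
    """Largest |normal speed| sampled over one prism revolution
    (without a prism, the image velocity is just the camera's)."""
    period = 2 * math.pi / omega if omega else 1.0
    peak = 0.0
    for k in range(samples):
        t = period * k / samples
        px, py = prism_image_velocity(t, radius, omega) if omega else (0.0, 0.0)
        v = (camera_velocity[0] + px, camera_velocity[1] + py)
        peak = max(peak, abs(normal_speed(edge_angle, v)))
    return peak


# Horizontal edge, camera moving horizontally: invisible without the prism.
print(peak_normal_speed(0.0, (1.0, 0.0)))
# Same edge with the rotating offset (radius 1, one revolution per unit time).
print(peak_normal_speed(0.0, (1.0, 0.0), radius=1.0, omega=2 * math.pi))
```

Without the prism the horizontal edge has zero normal speed and would produce no events; with the rotating offset its peak normal speed becomes 2π. Because the prism's contribution sweeps through every direction during each revolution, an edge of any orientation reaches a normal speed of at least `radius * omega` at some point in the cycle.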

Their research was featured in Science Robotics, a peer-reviewed scientific journal published by the American Association for the Advancement of Science.

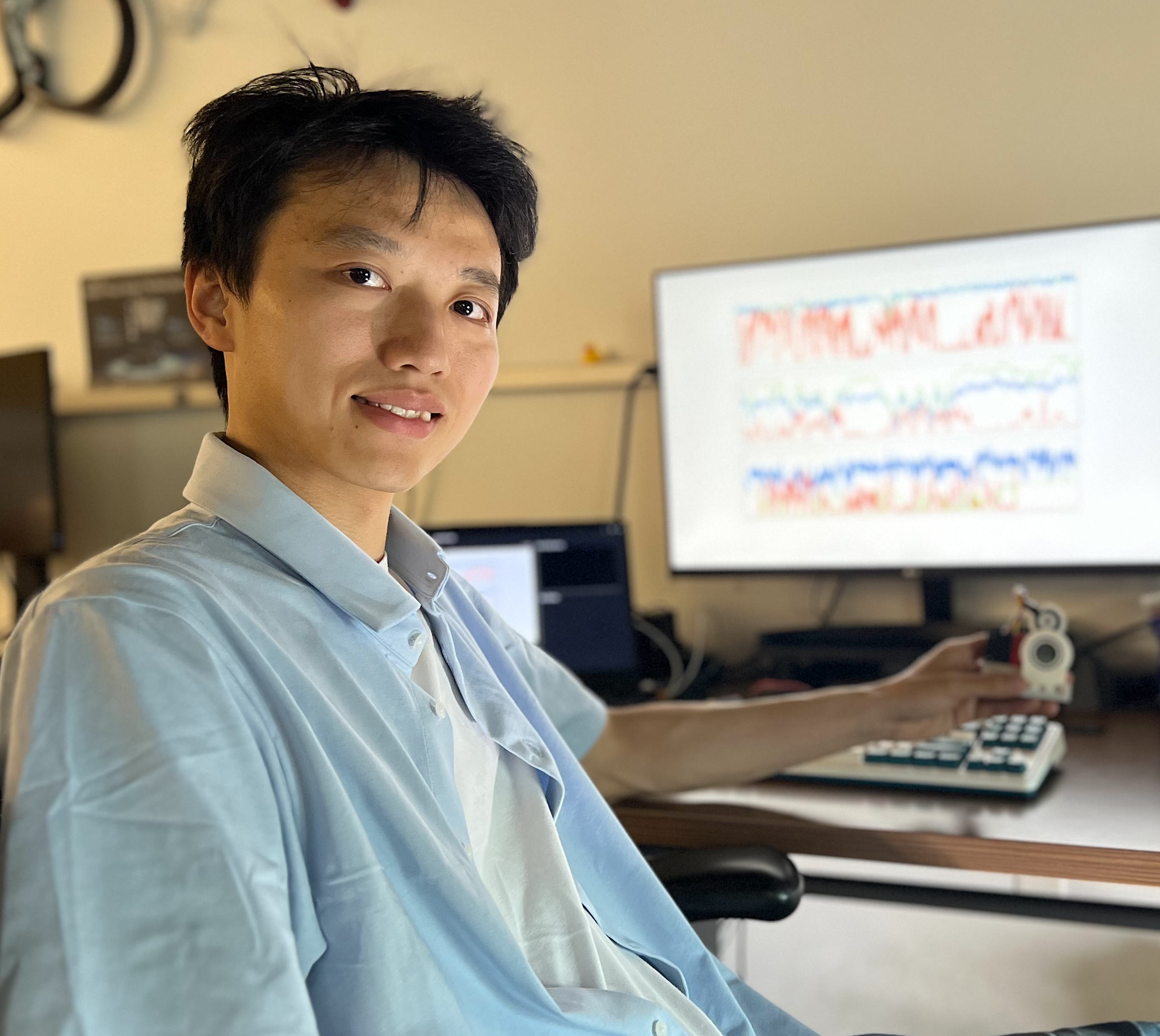

The hardware device and software solution highlighted in the published paper are called AMI-EV, which stands for artificial microsaccade-enhanced event camera, says the paper’s lead author, Botao He (in photo), a second-year doctoral student in computer science at the University of Maryland.

He explains the technology in a series of short videos he produced.

His adviser at Maryland and a co-author on the paper is Yiannis Aloimonos, a professor of computer science with an appointment in the University of Maryland Institute for Advanced Computer Studies (UMIACS). Joining He and Aloimonos on the project are Cornelia Fermüller, a research scientist in UMIACS; graduate student Jingxi Chen; and Chahat Deep Singh, who completed his Ph.D. at Maryland last year.

The UMD team worked closely with Ze Wang, Yuan Zhou, Yuman Gao, Kaiwei Wang, Yanjun Cao, Chao Xu and Fei Gao, all from Zhejiang University; and Haojia Li and Shaojie Shen from the Hong Kong University of Science and Technology.

Funding for their work came in part from a National Science Foundation grant on neuromorphic engineering for which Fermüller is the principal investigator, with additional support from UMD’s Department of Computer Science, UMIACS, the Institute for Systems Research, the FAST Lab at Zhejiang University and the Aerial Robotics Group at the Hong Kong University of Science and Technology.

—Story by UMIACS communications group